One of the advantages of releasing the Llama2 model as open source is that the community (the whole tech community: companies, universities, researchers and individuals, among others) can run experiments, do research and make improvements on the project, and share the outcomes. This has led to innovative techniques for using and training the model with much lower hardware requirements, along with a range of Python libraries that implement them. Among these techniques, Quantization and LoRA are the most important ones. It is also worth mentioning Ollama, a tool that makes use of these techniques to run large language models locally.

As we mentioned in another article, deploying or using the full Llama2 models requires several GPUs to perform inference. This makes it very difficult to use the model locally, without hosting it on platforms like AWS or Hugging Face. Hosting on these platforms costs money and defeats the purpose of using a free and open-source model. If you are going to spend money anyway, it is preferable to use GPT-3, whose API is less expensive than paying for a hosting service.

LoRA

Training or fine-tuning a model with billions of parameters, as is the case with LLMs, is very costly. Every weight has to be updated in every training step, which requires hours of processing and expensive hardware. But sometimes we start from an already trained model and want to keep its knowledge while continuing to train or fine-tune it from that point. In that case we may not need to update EVERY weight, but only a subset of them, so that the untouched original parameters preserve the knowledge.

LoRA (Low-Rank Adaptation of Large Language Models) is a technique that freezes the pretrained weights, adds pairs of new low-rank weight matrices (called update matrices) to the existing weights, and trains only those added weights. This drastically reduces the number of weights to be updated, from billions to millions, making it possible to fine-tune an LLM on a single regular, accessible GPU. GPUs of this kind are available for free in cloud notebooks such as Google Colab or Kaggle.
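The idea can be sketched for a single linear layer with NumPy. This is a minimal illustration, not how any particular library implements it: the pretrained matrix `W0` stays frozen, and the layer's output adds a scaled low-rank term `B @ A`, where only `A` and `B` would be trained. The layer size, rank `r` and scaling `alpha` below are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

d_out, d_in, r = 512, 512, 8   # layer shape and LoRA rank (illustrative values)
alpha = 16                     # LoRA scaling hyperparameter (illustrative)

W0 = rng.standard_normal((d_out, d_in))      # pretrained weight: frozen, never updated
A = rng.standard_normal((r, d_in)) * 0.01    # trainable, small random init
B = np.zeros((d_out, r))                     # trainable, zero init so the update starts at 0

def lora_forward(x: np.ndarray) -> np.ndarray:
    """h = W0 x + (alpha / r) * B A x, computed without materializing B @ A."""
    return W0 @ x + (alpha / r) * (B @ (A @ x))

x = rng.standard_normal(d_in)
# With B = 0 the adapted layer initially behaves exactly like the pretrained one.
assert np.allclose(lora_forward(x), W0 @ x)

full_params = W0.size            # 512 * 512 weights in the frozen matrix
lora_params = A.size + B.size    # 8 * 512 + 512 * 8 trainable weights
print(full_params, lora_params)  # 262144 8192
```

Initializing `B` to zero means fine-tuning starts from exactly the pretrained behavior, and the trainable part here is about 3% of the layer; the saving grows with the layer size.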

Hugging Face already provides an experimental feature for using LoRA with a variety of models. Another Hugging Face library, more oriented to language models, is PEFT (Parameter-Efficient Fine-Tuning), which supports LoRA and many other methods for fine-tuning models like Llama2 with low computational and storage costs.
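To put "from billions to millions" into numbers, here is a back-of-the-envelope count. It assumes the Llama2-7B dimensions (32 decoder layers, hidden size 4096) and a common LoRA setup that adapts only the query and value projections, which in PEFT is typically expressed as a `LoraConfig` with `r=8` and `target_modules=["q_proj", "v_proj"]`. The ranks and the approximate base-model size are illustrative assumptions.

```python
# Assumed Llama2-7B dimensions: 32 decoder layers, hidden size 4096,
# so the query (q_proj) and value (v_proj) projections are 4096 x 4096 each.
NUM_LAYERS = 32
HIDDEN = 4096
BASE_PARAMS = 6.7e9  # approximate size of the frozen base model

def lora_params_per_matrix(d_out: int, d_in: int, r: int) -> int:
    """A is r x d_in and B is d_out x r, so LoRA adds r * (d_in + d_out) weights."""
    return r * (d_in + d_out)

def lora_trainable_params(r: int, matrices_per_layer: int = 2) -> int:
    """Trainable weights when LoRA is applied to q_proj and v_proj in every layer."""
    per_layer = matrices_per_layer * lora_params_per_matrix(HIDDEN, HIDDEN, r)
    return NUM_LAYERS * per_layer

for r in (8, 16, 64):
    n = lora_trainable_params(r)
    print(f"r={r:>2}: {n:,} trainable weights ({100 * n / BASE_PARAMS:.3f}% of the base model)")
# r=8 gives 4,194,304 trainable weights
```

Even at rank 64 the trainable part stays well under 1% of the model, which is what makes single-GPU fine-tuning feasible.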

Quantization

An important factor in the cost of training large models is how the weights are represented in memory, that is, their numerical precision. Higher precision implies more storage space when saving the model, more compute and memory when performing inference or training, and, consequently, higher energy and time costs.
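A quick way to see how precision drives cost is to compute the memory needed just to hold the weights. This sketch assumes a 7-billion-parameter model and counts only the weights, ignoring activations, the KV cache and optimizer state, so real requirements are higher.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}

def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in BYTES_PER_PARAM:
    print(f"7B model in {dtype:>7}: {weight_memory_gb(7e9, dtype):5.1f} GB")
```

Going from 32-bit to 4-bit shrinks the weights of a 7B model from about 28 GB to about 3.5 GB, the difference between needing datacenter GPUs and fitting in a laptop's RAM.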

Quantization tackles this problem by representing model weights and activations with lower-precision data types (for example 16-bit floats or 8-bit integers) instead of the 32-bit floating-point precision in which large language models are usually stored. This technique is not free: accuracy with lower-precision data types may not be as high as with the full-precision representation. There is a trade-off between the accuracy we want to achieve and the model size, inference time and cost. With 8-bit or lower precision, it is even possible to run inference on LLMs locally, without any GPU.
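To make the trade-off concrete, here is a minimal sketch of one simple scheme, symmetric "absmax" quantization of a float32 tensor to int8, assuming NumPy is available. Formats used in practice typically quantize in small blocks, each with its own scale, but the principle is the same: store small integers plus a scale factor and accept a bounded rounding error.

```python
import numpy as np

def quantize_int8(w: np.ndarray):
    """Symmetric absmax quantization: map [-max|w|, max|w|] onto the int8 range [-127, 127]."""
    scale = float(np.abs(w).max()) / 127.0
    if scale == 0.0:  # all-zero tensor: any scale works, avoid dividing by zero
        scale = 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    """Recover an approximation of the original float values."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
w = rng.standard_normal(10_000).astype(np.float32)   # stand-in for a weight tensor

q, scale = quantize_int8(w)
w_hat = dequantize_int8(q, scale)

print("bytes:", w.nbytes, "->", q.nbytes)                 # 40000 -> 10000
print("max abs error:", float(np.abs(w - w_hat).max()))   # at most about scale / 2
```

The tensor takes a quarter of the memory, and each weight is off by at most half a quantization step; whether that error matters depends on the model and the task.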

There are many quantized Llama2 models already uploaded to Hugging Face, free to use and with many options. For example, a user called TheBloke has uploaded several versions, including the 7B-parameter Llama2 models optimized for chat, at quantization levels from 2 to 8 bits. They can be used locally from Python with the Transformers library or with LangChain's CTransformers module.

Code example

Here is a short Python script showing how to use a local quantized Llama2 model with LangChain and its CTransformers module:

CODE: https://gist.github.com/santit96/be816e2abf1b9120fdcefebeb05ccd25.js?file=langchain_local_llm.py

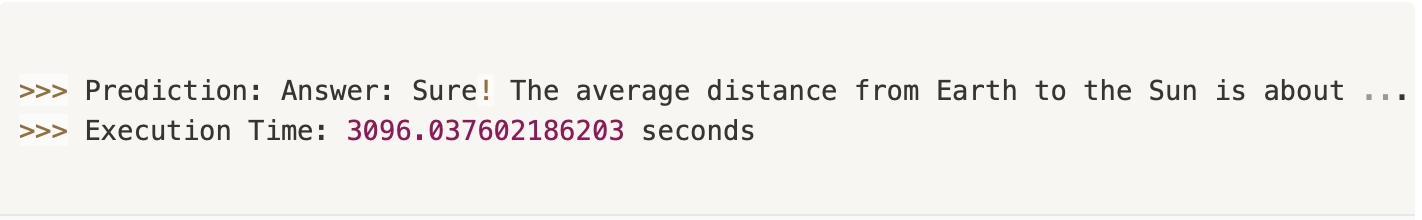

It is possible to run this using only a CPU, but response times are poor: they are very high in most cases, which makes this setup unsuitable for production environments that need real-time responses. Here is the same script with its running time measured:

CODE: https://gist.github.com/santit96/be816e2abf1b9120fdcefebeb05ccd25.js?file=langchain_local_llm_time.py

The result shows that it is not very usable. Perhaps an 8-bit quantized model is still too big for the machine it was executed on (a Mac M1 Pro). Trying a different CPU architecture, or switching to a 6- or 4-bit quantized model (if there is no GPU available), could improve performance.
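When trying these alternatives, it helps to time every variant with the same prompt. The sketch below is a generic timing harness using only the standard library; the two model functions are hypothetical stand-ins that just sleep, and in a real comparison each would wrap a call to a loaded model (for example an 8-bit and a 4-bit variant).

```python
import time
from typing import Callable, Dict

def time_generation(generate: Callable[[str], str], prompt: str, runs: int = 3) -> float:
    """Return the average wall-clock seconds per call of generate(prompt)."""
    total = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        generate(prompt)
        total += time.perf_counter() - start
    return total / runs

def compare_models(models: Dict[str, Callable[[str], str]], prompt: str,
                   runs: int = 3) -> Dict[str, float]:
    """Time each candidate model on the same prompt so the results are comparable."""
    return {name: time_generation(fn, prompt, runs) for name, fn in models.items()}

# Hypothetical stand-ins: replace with functions that call real quantized models.
def fake_q8(prompt: str) -> str:
    time.sleep(0.02)
    return "answer"

def fake_q4(prompt: str) -> str:
    time.sleep(0.01)
    return "answer"

timings = compare_models({"q8_0": fake_q8, "q4_0": fake_q4}, "What is LoRA?")
for name, seconds in sorted(timings.items(), key=lambda kv: kv[1]):
    print(f"{name}: {seconds:.3f} s per response")
```

Averaging over a few runs smooths out one-off delays such as the first call loading the model into memory.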

Ollama

Ollama combines quantization with Modelfiles, a way to create and share models, to run large language models locally. It streamlines setup and configuration details, including GPU usage. A Modelfile is a file with Dockerfile-like syntax that defines a series of configurations and variables used to bundle model weights, configuration and data into a single package.

For example, to run and use the 7B-parameter version of Llama2:

- Download Ollama

- Fetch the Llama2 model with `ollama pull llama2`

- Run Llama2 with `ollama run llama2`

The 7B model requires at least 8 GB of RAM, and by default Ollama uses 4-bit quantization. Other quantization levels can be tried by changing the tag after the model name, for example `ollama run llama2:7b-chat-q4_0`. The number after the q is the number of bits used for quantization.
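The tag convention can also be read programmatically. This hypothetical helper assumes tags follow the `-q<bits>_` pattern seen in `llama2:7b-chat-q4_0` and returns None for tags that do not contain it, such as the plain `llama2`.

```python
import re
from typing import Optional

# Assumed naming convention: "-q<bits>_" inside the tag, as in "llama2:7b-chat-q4_0".
QUANT_PATTERN = re.compile(r"-q(\d+)_")

def quant_bits(tag: str) -> Optional[int]:
    """Return the quantization bit width encoded in an Ollama model tag, or None."""
    match = QUANT_PATTERN.search(tag)
    return int(match.group(1)) if match else None

for tag in ("llama2:7b-chat-q4_0", "llama2"):
    print(tag, "->", quant_bits(tag))
```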

Response times are acceptable:

And although its accuracy is not as high as that of the full 7B model, it is a great alternative for language tasks that are not too complex. It is also supported by LangChain, so it can easily be integrated into any Python application.
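Besides LangChain, a Python application can talk to Ollama directly over its local HTTP API while the Ollama server is running. This sketch uses only the standard library and assumes the default address `http://localhost:11434`, the `/api/generate` endpoint, and `stream` set to false, so the whole answer arrives as one JSON object whose `response` field holds the generated text.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local endpoint

def build_request(prompt: str, model: str = "llama2") -> urllib.request.Request:
    """Build a non-streaming generate request for the given model and prompt."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt: str, model: str = "llama2", timeout: float = 300.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    req = build_request(prompt, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the server running and the model pulled, `generate("Why is the sky blue?")` returns the model's answer as a plain string; the generous timeout accounts for slow CPU-only responses.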

Conclusion

In conclusion, releasing the Llama2 model as an open-source project has allowed the tech community to experiment, conduct research and make improvements. Techniques like Quantization and LoRA have re-emerged and, together with a set of Python libraries, make it possible to use and train the model with reduced hardware requirements. Ollama, a tool that leverages these techniques, allows running large language models locally. While deploying the full Llama2 models may require multiple GPUs, quantized models and tools like Ollama provide cost-effective alternatives for using and fine-tuning Llama2. These advancements make it possible to use Llama2 for various language tasks without relying solely on expensive hosting services or extensive hardware resources.