The core idea of agents is to create an entity that decides, from an input or context, which sequence of actions to take—in essence, determining which function or tool to use at runtime. Although not a new concept, with the support of large language models (LLMs), we can now use a language model as a reasoning engine to decide the sequence and type of actions to take.

In this article, I will show you how to create an agent and its tools using LangChain and GPT-3.5.

Why use Agents?

The use of agents can help us achieve certain tasks that LLMs alone struggle with and that cannot be solved by prompt engineering alone.

Agents strengthen LLMs on two crucial issues:

- Decomposing a task into simpler, more manageable components is more effective than trying to solve everything with a single prompt.

- For certain tasks, it is preferable to use tools other than LLMs.

To address these issues, we can use prompting techniques that approximate an agent, such as Chain-of-Thought, ReAct, and Automatic Reasoning and Tool-use (ART). Going a step further, some LLMs offer function calling: given a list of function descriptions, the model recommends which function to call and with which arguments, and the application runs it. OpenAI’s GPT models were the first to implement function calling; these capabilities became “tools” when GPT Assistants emerged, which took function calling and other features to another level by allowing ChatGPT to use them directly, rather than merely suggesting what to use.
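
To make the function-calling flow concrete, here is a minimal plain-Python sketch. It does not contact any real model: `model_reply` is a hand-written stand-in for what a function-calling LLM might return, and `get_weather`, `FUNCTIONS`, and `dispatch` are hypothetical names chosen only for illustration.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical function that the application exposes to the model."""
    return f"It is sunny in {city}."


# Registry of callable functions, keyed by the name advertised to the model.
FUNCTIONS = {"get_weather": get_weather}

# Hand-written stand-in for a model reply. The model only *suggests* a call:
# it names the function and supplies the arguments as a JSON string.
model_reply = json.dumps(
    {"name": "get_weather", "arguments": json.dumps({"city": "Lima"})}
)


def dispatch(reply: str) -> str:
    """Parse the suggested call and run the matching function ourselves."""
    call = json.loads(reply)
    func = FUNCTIONS[call["name"]]
    kwargs = json.loads(call["arguments"])
    return func(**kwargs)


result = dispatch(model_reply)
print(result)
```

The point of the sketch is the division of labor: the model picks the function and its arguments, while the application keeps control over actually executing it.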

LangChain Agents

GPT Assistants are great, but what if we want to build our own agent that may use GPT or another LLM, and where we can easily declare our functions or tools using simple Python code and integrate it smoothly with our app? This is where LangChain comes into play.

LangChain enables us to easily create an app powered by an LLM Agent. It features a dedicated module for Agents and incorporates numerous concepts and functionalities already implemented for us.

LangChain agents consist of three main components:

- Agents: These are the entities that receive user input and decide whether an external function is needed to fulfill the request, and if so, which one. Once the tool or tools have responded, the agent can formulate an answer to the query. There are various types of agents, and we can select the most appropriate one depending on the LLM used and the type of task. They range from agents that employ prompting techniques like ReAct to select the proper tools, to more advanced ones that leverage the LLM's built-in capability for function calling, such as the tool calling agent. For more information, see the Agents and Agent Types documentation.

- Tools: These are functions that agents use to provide more comprehensive responses. They should include a description explaining their purpose to the agent, along with an input schema that identifies the accepted parameters, their descriptions, and their types. In LangChain, these tools can be plain Python functions wrapped with a decorator that uses the function's docstring as the description and the parameter names with their type hints as the input schema. For more information, see the Tools documentation.

- Agent Executor: This component effectively runs the agent. It initiates the agent with user input, executes the selected action, passes the result back to the agent, and repeats this process until the agent no longer calls any tools and generates the final response.
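
The loop that the Agent Executor runs can be sketched in a few lines of plain Python. This is not LangChain's implementation, only an illustration of the cycle described above: `scripted_agent` is a hard-coded stand-in for the LLM-backed agent, and `TOOLS`, `add`, and `run_executor` are hypothetical names.

```python
from typing import Any, Callable, Dict, List, Tuple


def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


# Tools available to the agent, keyed by name.
TOOLS: Dict[str, Callable[..., Any]] = {"add": add}


def scripted_agent(user_input: str, steps: List[Tuple[str, Any]]):
    """Stand-in for the LLM: request a tool first, then answer.

    Returns ("call", tool_name, kwargs) or ("final", answer_text, None).
    """
    if not steps:
        return ("call", "add", {"a": 2, "b": 3})
    last_observation = steps[-1][1]
    return ("final", f"The result is {last_observation}.", None)


def run_executor(user_input: str, max_iterations: int = 5) -> Dict[str, Any]:
    """Ask the agent, run the tool it picks, feed the result back, repeat."""
    steps: List[Tuple[str, Any]] = []
    for _ in range(max_iterations):
        kind, payload, kwargs = scripted_agent(user_input, steps)
        if kind == "final":
            # No more tool calls: the agent produced its answer.
            return {"input": user_input, "output": payload}
        observation = TOOLS[payload](**kwargs)
        steps.append((payload, observation))
    return {"input": user_input, "output": "Stopped: iteration limit reached."}


response = run_executor("What is 2 + 3?")
print(response["output"])
```

The iteration cap is a design choice of this sketch: it keeps a misbehaving agent from calling tools forever.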

Building Our Own Agent

Let's get hands-on and start coding our own agent.

We'll begin by creating the agent itself, opting for the OpenAI Tools Agent. To do so, we need to define the prompt, specify the LLM we want to use (it must support tool calling, so we will use GPT-3.5-turbo), and list the tools the agent will employ. We will leave the tool definitions for later.

Then we proceed to define the tools.

First, we'll create a custom tool called joke_tool that is powered by an LLM and responds to every query with a joke. Defining a tool is as simple as using the @tool decorator. It is essential to write a descriptive docstring and to give each parameter a meaningful name and the correct type hint, as this is what the agent will use to decide whether to call that tool.
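
To show why the docstring and type hints matter, here is a small sketch of how a decorator can derive a tool's description and input schema from them. `simple_tool` is a hypothetical decorator written for this illustration; it is not LangChain's `@tool`, and the `joke_tool` body is a placeholder rather than a real LLM call.

```python
import inspect
from typing import Callable, get_type_hints


def simple_tool(func: Callable) -> Callable:
    """Hypothetical decorator: attach a name, description, and input schema."""
    hints = get_type_hints(func)
    hints.pop("return", None)  # the schema only describes the inputs
    func.tool_name = func.__name__
    func.description = inspect.getdoc(func) or ""
    func.args_schema = {name: tp.__name__ for name, tp in hints.items()}
    return func


@simple_tool
def joke_tool(topic: str) -> str:
    """Tell a joke about the given topic."""
    # Placeholder body; in the article this tool is powered by an LLM.
    return f"Here is a joke about {topic}."


print(joke_tool.tool_name)
print(joke_tool.description)
print(joke_tool.args_schema)
```

Everything the agent knows about the tool comes from these three attributes, so a vague docstring or a parameter named `x` gives it very little to work with.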

Let’s define the rest of the tools the agent will use. LangChain provides a wide variety of ready-made tools, which you can explore in its documentation. Along with our custom joke_tool, we will employ the DuckDuckGoSearchResults tool to search the internet for current events, the YouTubeSearchTool to look for videos, and the WikipediaQueryRun tool to inquire about any topic.

We now have the agent and the tools, so all that remains is to define the AgentExecutor. It is important to set handle_parsing_errors=True so that the agent can recover when the parameters of a tool call fail to parse.
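
The general idea behind this kind of error handling can be sketched without LangChain: instead of letting a malformed tool call crash the run, the error is turned into a message that goes back to the model so it can try again. The sketch below is an assumption about the pattern, not a copy of LangChain's internals; `word_count` and `call_tool_safely` are hypothetical names, and the `handle_parsing_errors` parameter here only mirrors the flag's name.

```python
import json


def word_count(text: str) -> int:
    """Count the words in a piece of text."""
    return len(text.split())


def call_tool_safely(raw_arguments: str, handle_parsing_errors: bool = True) -> str:
    """Parse JSON arguments and run the tool.

    With handle_parsing_errors=True, a failure becomes an observation string
    that could be sent back to the model; otherwise the exception propagates.
    """
    try:
        kwargs = json.loads(raw_arguments)
        return str(word_count(**kwargs))
    except (json.JSONDecodeError, TypeError) as error:
        if not handle_parsing_errors:
            raise
        return f"Invalid tool input: {error}. Please try again with valid arguments."


good = call_tool_safely('{"text": "agents call tools"}')
bad = call_tool_safely('{"text": ')  # truncated JSON, as a model might emit

print(good)
print(bad)
```

With the flag disabled, the same truncated input raises `json.JSONDecodeError` and the whole run stops, which is the behavior the setting is meant to avoid.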

Now let's put it all together in a script! To call the agent, call invoke on the AgentExecutor with a dict whose key is "input" and whose value is the user's query. To get the agent's answer, read the "output" field of the response:

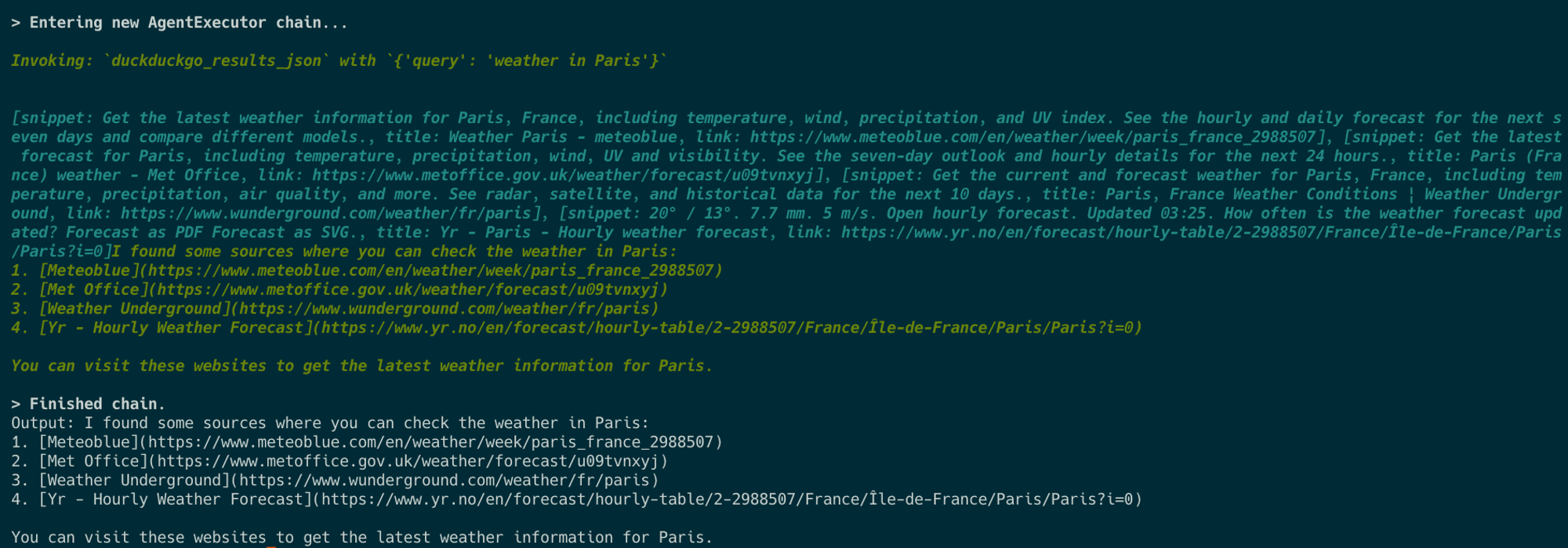

Let's examine some input/output examples to verify agent performance:


Bonus: get the tools used

In the example above, we could see the tools that were used printed in the console because we set verbose=True. But what if we wanted to get the list of tools used, along with their responses, and work with them inside our application? To achieve this, we must set return_intermediate_steps=True in the agent executor. We can then access the list of intermediate steps, which contains the tools employed and their corresponding responses.
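
As a rough sketch of how those steps might be processed, the snippet below assumes that the response carries an "intermediate_steps" list of (action, observation) pairs, where each action exposes `tool` and `tool_input` attributes. That is my understanding of the shape the AgentExecutor returns, so check it against your installed version. The `Action` class is a stand-in, `summarize_steps` is a hypothetical helper, and the response itself is hand-written example data, not real agent output.

```python
from typing import Any, Dict, List, NamedTuple


class Action(NamedTuple):
    """Stand-in for the action object in each step (for illustration only)."""
    tool: str
    tool_input: Any


# Hand-written example shaped like an executor response; not real output.
response: Dict[str, Any] = {
    "input": "Tell me about Python and then tell me a joke",
    "output": "Example final answer.",
    "intermediate_steps": [
        (Action("wikipedia", "Python"), "Example summary text."),
        (Action("joke_tool", "Python"), "Example joke text."),
    ],
}


def summarize_steps(result: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Flatten (action, observation) pairs into plain dictionaries."""
    summary = []
    for action, observation in result["intermediate_steps"]:
        summary.append(
            {
                "tool": action.tool,
                "input": action.tool_input,
                "observation": observation,
            }
        )
    return summary


steps = summarize_steps(response)
for step in steps:
    print(step["tool"], "->", step["observation"])
```

Once the steps are plain dictionaries, the application can log them, show them to the user, or filter them by tool name.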

Conclusion

This article has demonstrated how simple and effective it is to create agents with LangChain and integrate them with powerful language models such as GPT-3.5. By leveraging LangChain's tools and functionalities, developers can quickly build custom agents targeted to specific tasks, improving task decomposition and tool utilization. This is only a starting point, as we have barely scratched the surface of what agents can do. There is still much more to delve into, such as memory management and the internal implementation of agents.